
Clear Cache

If you use the unique mode for the walk command, the digger creates a special cache so that the same URL is not visited more than once across multiple runs. A special cache is also created when you use the update mode for the object_save and object_check commands; in this mode an object is saved only if it is a new record or if some data in an existing record has changed. So there are two types of cache: the URL cache, used by the walk command, and the record cache, used by the object_save and object_check commands. Both caches let you retrieve only the data you really need and skip what you have already scraped, which saves time and resources.

To understand how the cache works, let's look at an example with the walk command.

Suppose we have a config that visits two links in sequence:

```yaml
---
config:
    debug: 2
    agent: Firefox
do:
- link_add:
    url:
    - https://www.diggernaut.com/sandbox/meta-lang-hash-table-en.html
    - https://www.diggernaut.com/sandbox/meta-lang-normalize-tables-en.html
- walk:
    to: links
    mode: unique # UNIQUE MODE ENABLED
    do:
```

Let's run this config. Once it has finished, both links are written to the cache.

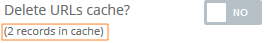

If you look at the cache clearing section, you will see a hint:

Now let's add one more link to the existing list:

```yaml
---
config:
    debug: 2
    agent: Firefox
do:
- link_add:
    url:
    - https://www.diggernaut.com/sandbox/meta-lang-hash-table-en.html
    - https://www.diggernaut.com/sandbox/meta-lang-normalize-tables-en.html
    - https://www.diggernaut.com/sandbox/meta-lang-object-en.html
- walk:
    to: links
    mode: unique # UNIQUE MODE ENABLED
    do:
```

Run the digger again and check the log. You will see the following messages:

```
Skip: https://www.diggernaut.com/sandbox/meta-lang-hash-table-en.html
Skip: https://www.diggernaut.com/sandbox/meta-lang-normalize-tables-en.html
Retrieving page (GET): https://www.diggernaut.com/sandbox/meta-lang-object-en.html
```

The digger skipped the previously collected links and fetched only the new one. The cache now holds 3 records. If we run the digger once more with the same config, it will skip all 3 links.
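To make this behavior concrete, here is a minimal Python model of unique mode, assuming the URL cache behaves like a set of visited URLs that persists between runs. It is a conceptual sketch, not Diggernaut's implementation: the `walk_unique` function is hypothetical, no pages are actually fetched, and the log strings only mirror the messages shown above.

```python
# Conceptual model of the walk command's unique mode (not Diggernaut's code).
# The URL cache is modeled as a set that survives from one run to the next.

def walk_unique(links, url_cache):
    """Visit each link once; skip any URL already recorded in url_cache."""
    log = []
    for url in links:
        if url in url_cache:
            log.append(f"Skip: {url}")
        else:
            # A real digger would download the page here.
            log.append(f"Retrieving page (GET): {url}")
            url_cache.add(url)
    return log


BASE = "https://www.diggernaut.com/sandbox/"
first = [BASE + "meta-lang-hash-table-en.html",
         BASE + "meta-lang-normalize-tables-en.html"]
second = first + [BASE + "meta-lang-object-en.html"]

cache = set()                             # one cache shared by every run
walk_unique(first, cache)                 # run 1: both pages are fetched
for line in walk_unique(second, cache):   # run 2: only the new page is fetched
    print(line)
print(len(cache))                         # 3
```

A third call to `walk_unique(second, cache)` returns only `Skip:` lines, matching the behavior described for the third run.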

The cache works in a similar way for the object_save command, but it is a bit more complex: it uses keys and checksums to track records.
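The key-and-checksum idea can be sketched as follows, assuming each record has a field that identifies it (its key) and that the checksum is computed over all of the record's fields. The exact key and checksum scheme Diggernaut uses is not described here, so the SHA-256 digest of sorted JSON, the `sku` field and the `save_if_changed` helper are hypothetical illustrations.

```python
import hashlib
import json


def checksum(record):
    """Stable SHA-256 digest of a record (field order does not matter)."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def save_if_changed(record, key_field, record_cache, saved):
    """Hypothetical update-mode save: store the record only if it is new
    or its data changed. record_cache maps key -> checksum of the last
    saved version. Returns "new", "updated" or "skipped"."""
    key = record[key_field]
    digest = checksum(record)
    previous = record_cache.get(key)
    if previous == digest:
        return "skipped"                  # same key, same data
    record_cache[key] = digest
    saved.append(record)
    return "new" if previous is None else "updated"


cache, saved = {}, []
item = {"sku": "A-100", "name": "Bolt", "price": 1.25}   # hypothetical record
print(save_if_changed(item, "sku", cache, saved))                     # new
print(save_if_changed(item, "sku", cache, saved))                     # skipped
print(save_if_changed({**item, "price": 1.30}, "sku", cache, saved))  # updated
print(len(saved))                                                     # 2
```

Storing only the checksum per key keeps the cache small: the previous version of a record never has to be kept in full to detect a change.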

Pay attention!

The digger has a single cache shared by all sessions. Every time you start the digger, it reads this same cache and modifies it. If you need to collect data from scratch, you have to clear the cache.
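As a rough model of a cache shared by all sessions, the sketch below keeps both cache types in one JSON file that each run loads and rewrites, plus a `clear` method that drops one cache type permanently. The file layout, the `DiggerCache` class and its method names are hypothetical; the sketch only models the behavior described above and makes no claim about how Diggernaut stores its cache. In practice you clear the cache through the interface.

```python
import json
import os
import tempfile


class DiggerCache:
    """Hypothetical file-backed cache shared by all runs of one digger.

    "urls" models the walk URL cache; "records" models the record cache
    (key -> checksum). Both live in one JSON file, so every run starts
    from whatever the previous run left behind.
    """

    def __init__(self, path):
        self.path = path
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                data = json.load(f)
        else:
            data = {"urls": [], "records": {}}
        self.urls = set(data["urls"])
        self.records = dict(data["records"])

    def save(self):
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump({"urls": sorted(self.urls), "records": self.records}, f)

    def clear(self, kind):
        """Permanently drop one cache type: "urls" or "records"."""
        if kind == "urls":
            self.urls.clear()
        elif kind == "records":
            self.records.clear()
        else:
            raise ValueError(f"unknown cache type: {kind!r}")
        self.save()


path = os.path.join(tempfile.mkdtemp(), "cache.json")
run1 = DiggerCache(path)
run1.urls.add("https://www.diggernaut.com/sandbox/meta-lang-hash-table-en.html")
run1.save()

run2 = DiggerCache(path)             # a later session sees the same cache
print(len(run2.urls))                # 1
run2.clear("urls")                   # start crawling from scratch
print(len(DiggerCache(path).urls))   # 0
```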

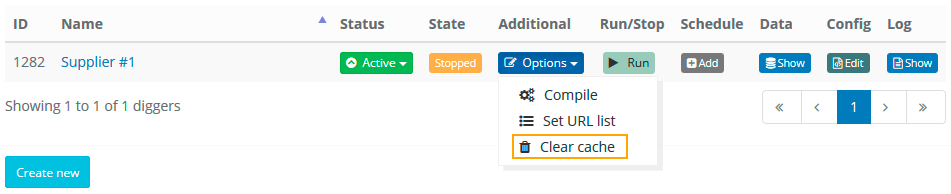

To clear the cache, select Clear cache:

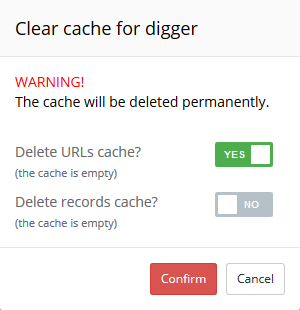

Select the type of cache you need to clear and press the Confirm button.

Pay attention!

The cache will be deleted permanently.